Integrating AI into your workflow is no longer optional: it is a game-changer. AI automates repetitive tasks, analyzes data quickly, and supports smarter decision-making, saving time and boosting productivity. In our industry, augmented reality (AR), AI is used to create immersive, personalized experiences by analyzing user behavior, generating textures in real time, and even creating 3D models.

In this article, GEENEE’s immersive developer, Alex Grishin, explains how he uses AI to streamline the creation of realistic WebAR experiences.

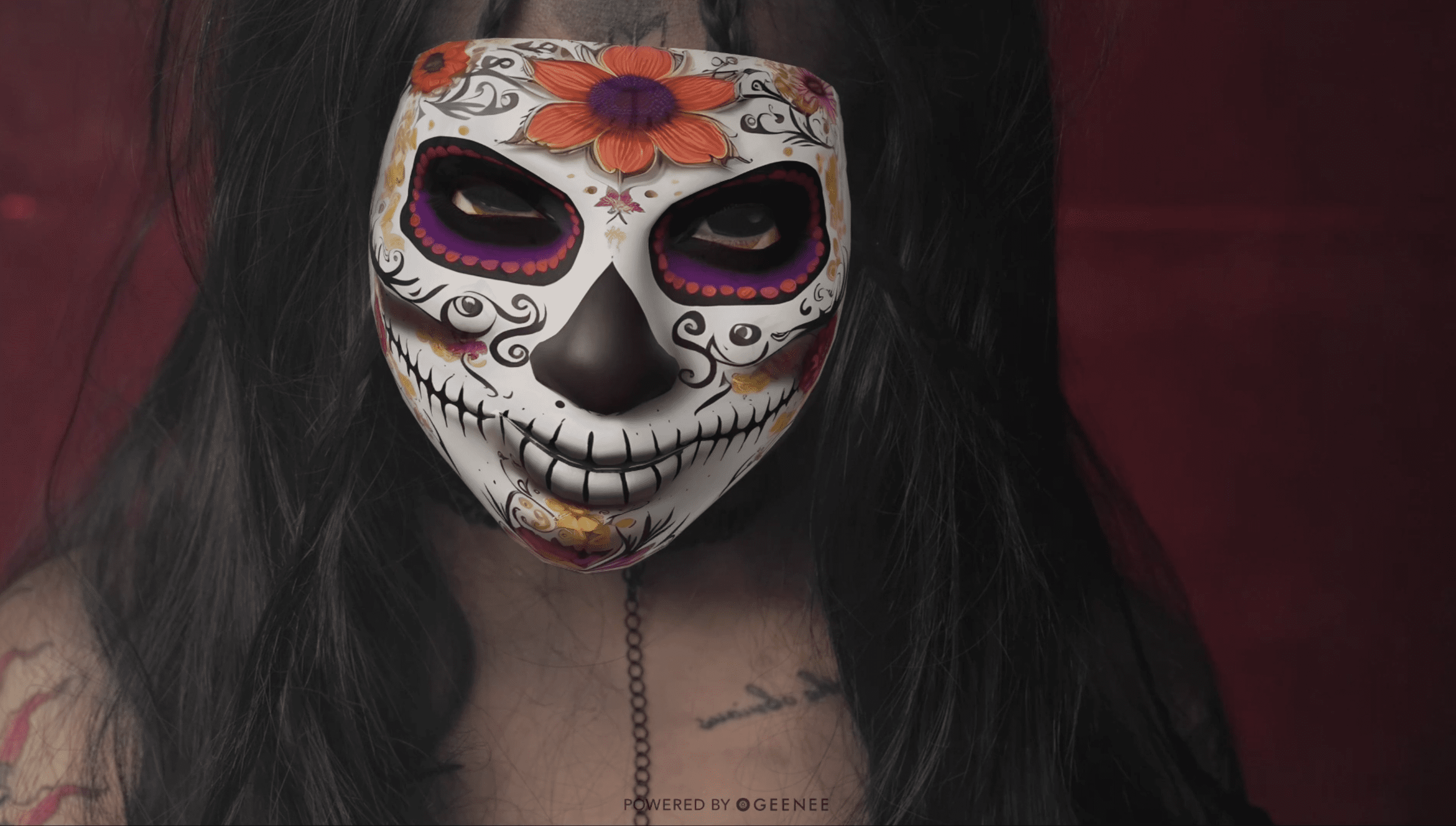

In augmented reality (AR) content, textures play a crucial role, as they allow for the creation of realistic surfaces and materials for virtual objects, whether it’s masks, clothing, characters, or environments. Textures add detail and depth, making the integration of these elements into the virtual scene more natural and believable.

High-quality textures are especially important for AR because they determine how realistic the virtual content looks. For example, textures for AR masks help accurately display skin details, makeup, or additional elements (such as patterns and decorations), creating the impression of real interaction with the user’s face. For clothing textures, it is essential to convey the variety of fabrics, their texture, and their reaction to lighting, allowing virtual outfits in AR try-ons to appear realistic.

With the advent of modern neural network-based image generation technologies, the possibilities for creating such textures have significantly expanded. Generative models like GANs and diffusion models automate the texture creation process, producing detailed images tailored for AR projects. This is particularly useful when creating diverse content, whether for masks, clothing, or virtual characters, ultimately enhancing the user experience and expanding AR technology capabilities.

Image Generation Technologies

Image generation with neural networks has become an essential tool for creating the textures used in augmented reality (AR). Modern approaches include Generative Adversarial Networks (GANs) and diffusion models. Both can produce detailed, realistic textures that adapt to different conditions and AR content needs.

- Generative Adversarial Networks (GANs) involve two neural networks — a generator and a discriminator — trained simultaneously. The generator creates images based on random noise, attempting to generate realistic textures, while the discriminator evaluates them and determines if they are genuine or fake. This process trains the model to create high-quality, diverse textures that can be used for masks, clothing, characters, and other objects in AR applications.

- Diffusion models, on the other hand, operate on a different principle. During training, noise is gradually added to example images and the model learns to remove it step by step. New textures are then generated by starting from noise and denoising it, guided by what the model learned from existing examples. These models offer greater control over the details and style of generated textures, which is especially useful when creating unique AR content, including textures for characters or complex masks with various visual effects.
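To make the "adding noise" half of a diffusion model more concrete, here is a minimal sketch of the forward (noising) process in plain Python. It assumes the widely used formulation in which a noised sample is a weighted mix of the original pixels and Gaussian noise. The linear schedule and its start and end values are a common choice picked for illustration, and the pixel values are made up. The reverse, denoising half needs a trained network and is not shown.

```python
import math
import random


def linear_beta_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, growing linearly from beta_start to beta_end."""
    if steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (steps - 1)
    return [beta_start + i * step for i in range(steps)]


def cumulative_signal(betas):
    """alpha_bar[t]: share of the original signal variance left after step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, alpha_bar, rng):
    """Jump straight to a noised version of `pixels` (values roughly in [-1, 1])."""
    keep = math.sqrt(alpha_bar)
    spread = math.sqrt(1.0 - alpha_bar)
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_signal(betas)
rng = random.Random(0)  # fixed seed so the sketch is repeatable
texture_row = [0.8, -0.2, 0.5, 0.1]  # hypothetical pixel values
slightly_noisy = add_noise(texture_row, alpha_bars[9], rng)
almost_pure_noise = add_noise(texture_row, alpha_bars[-1], rng)
```

Early steps keep almost all of the original texture, while the last step leaves essentially pure noise. That is why generation can start from random noise and work backwards.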

Today, popular platforms and frameworks provide access to these technologies, allowing the creation of high-quality textures. For instance, DALL·E, built into ChatGPT by OpenAI, can generate images based on text descriptions, simplifying the creation of textures for AR content with specific characteristics. Midjourney is another popular platform that allows the creation of unique, stylized textures suitable for virtual masks or clothing design. Stable Diffusion offers flexibility in adjusting generation parameters, making it a suitable tool for developing complex textures for characters and other AR elements.

These platforms and technologies give AR developers the ability to experiment and quickly create textures that meet project requirements, opening new horizons in design and improving user experiences.

Integration with Geenee WebAR SDK

To effectively use generated textures in WebAR applications with Geenee WebAR SDK for face and body tracking, several stages are necessary, starting with texture generation and preparation, and ending with their integration into web content.

Texture Generation and Post-processing:

First, textures are generated using tools like Stable Diffusion or DALL·E, based on text descriptions or sketches. After generation, textures often require refinement and optimization in Photoshop or other photo editors. In post-processing, color correction, sharpening, and artifact removal may be needed to ensure textures blend well with lighting and the characteristics of the 3D model. Layers and masks are often used to highlight specific parts of the texture, adding details or adjusting transparency for more precise application in the subsequent stage.

Applying Textures to 3D Models in Blender:

After post-processing, textures are loaded into a 3D editor like Blender. Here, the texture is applied to the model using UV mapping, which allows it to be precisely distributed across the object’s surface.
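As a rough illustration of what UV mapping does, the sketch below converts a UV coordinate into the pixel it refers to. It is a simplified nearest-pixel lookup, not how Blender or a GPU samples textures. The one detail worth noticing is the vertical axis: Blender's UV editor measures V from the bottom edge, while glTF measures it from the top, so the function takes a flag for that. The function names and the tiny 2x2 texture are hypothetical.

```python
def uv_to_pixel(u, v, width, height, v_origin_bottom=True):
    """Map a UV coordinate in [0, 1] to the (column, row) of the nearest texel.

    Rows are counted from the top of the image, as in most image files.
    """
    u = min(max(u, 0.0), 1.0)  # clamp instead of wrapping, for simplicity
    v = min(max(v, 0.0), 1.0)
    if v_origin_bottom:
        v = 1.0 - v  # flip so that v = 0 refers to the bottom row
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row


def sample(texture, u, v, v_origin_bottom=True):
    """Nearest-neighbour lookup in a texture stored as rows of pixel values."""
    height, width = len(texture), len(texture[0])
    col, row = uv_to_pixel(u, v, width, height, v_origin_bottom)
    return texture[row][col]


# Hypothetical 2x2 texture, top row first.
checker = [["red", "green"],
           ["blue", "white"]]
bottom_left = sample(checker, 0.1, 0.1)                     # V measured from the bottom
top_left = sample(checker, 0.1, 0.1, v_origin_bottom=False)  # V measured from the top
```

If a texture appears upside down after export, a mismatch in this convention is one of the first things to check.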

Various shaders and material settings are used so that the texture reacts correctly to light in the AR scene. For example, reflections and transparency can be adjusted for realistic skin or fabric rendering.

Real-time texture testing in Blender helps identify potential mismatches or distortions that need to be corrected before exporting the model.

- The finished model with its texture is exported in a format such as glTF/GLB. This format preserves materials and textures, allowing easy import into the WebAR scene.

- Using the Geenee SDK, the developer links the texture and model to face or body tracking. For example, if it’s a mask, the texture will automatically follow the user’s facial movements, thanks to the SDK’s features.

- Applying textures to models in Babylon.js makes it possible to configure material behavior based on lighting and viewing angle, creating an immersive and realistic effect.

These steps enable the integration of generated textures with minimal distortion and optimized performance, allowing for quick and high-quality development of WebAR content accessible to users through any device’s browser.
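Before wiring an exported model into a scene, it can help to confirm that the file really is a binary glTF. The sketch below reads the 12-byte GLB header (the ASCII magic `glTF`, a version number, and the total file length, all little-endian). It is only a sanity check: it does not validate the JSON or binary chunks that follow. The helper name and the stand-in payload are made up for illustration.

```python
import struct

GLB_MAGIC = b"glTF"
GLB_HEADER = struct.Struct("<4sII")  # magic, version, total length (little-endian)


def read_glb_header(data):
    """Return (version, declared_length) or raise ValueError for a non-GLB payload."""
    if len(data) < GLB_HEADER.size:
        raise ValueError("too short to be a GLB file")
    magic, version, length = GLB_HEADER.unpack_from(data)
    if magic != GLB_MAGIC:
        raise ValueError("missing glTF magic bytes")
    if length != len(data):
        raise ValueError("declared length does not match file size")
    return version, length


# Smallest possible stand-in: a header with no chunks (not a usable model).
fake_glb = GLB_HEADER.pack(GLB_MAGIC, 2, GLB_HEADER.size)
version, length = read_glb_header(fake_glb)
```

With a real export, the bytes would come from the file on disk, for example by opening it in binary mode and passing the contents to `read_glb_header`.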

Advantages

Using image generation for creating AR textures has several significant advantages, but it also faces challenges and limitations.

Time Savings:

Generating textures using neural networks like GANs or diffusion models automates the process and creates textures in minutes, greatly speeding up AR content development.

Rapid Creation of Unique Textures:

Instead of searching for or manually drawing textures, neural networks can generate textures based on given parameters, ensuring uniqueness and variety. This is especially useful for AR masks, clothing, and characters, where diverse and stylized images are needed.

Adaptability:

Generative models allow adjusting textures according to specific project requirements. For example, textures can be created to react to lighting or change appearance depending on the viewing angle, which is crucial for dynamic AR content.

Challenges and Limitations

Difficulty in Controlling the Outcome:

Neural networks enable quick texture creation, but control over their final result may be limited. Achieving the desired level of detail, the correct color scheme, or specific stylistic design can be challenging. Generated textures may contain artifacts, have incorrect proportions, or not match the initial vision.

Need for Post-processing:

To achieve the desired result, additional work in photo editors like Photoshop is often required. During post-processing, textures are manually adjusted: details are corrected, colors are set, and unwanted elements are removed. In more complex cases, textures are transferred to a 3D editor like Blender for further adjustments to ensure proper texture integration with the model and its correct display in the AR scene.

Practical Tips and Recommendations

Choosing a Platform and Setting Up the Neural Network for Texture Generation:

- When choosing a platform for texture generation, such as DALL·E (ChatGPT), Midjourney, or Stable Diffusion, consider your project’s specifics and the available tools. For quick generation of stylized textures, Midjourney and DALL·E are suitable, while for more flexibility and control, Stable Diffusion, which can be set up locally with additional parameters, is recommended.

- If you work with custom requirements, adjust the neural network by setting parameters like color palette, style, scale, or detail level. Consider creating your own dataset to train the model on textures that match the AR content’s specifics, such as virtual clothing or masks.
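One lightweight way to keep parameters such as palette, style, and detail level consistent across many generations is to build prompts from a small structured description instead of typing them by hand. The sketch below is only an illustration: the field names and the prompt wording are assumptions, not a format required by DALL·E, Midjourney, or Stable Diffusion. The request for flat lighting reflects the idea that lighting is better added later by the AR engine than baked into the image.

```python
from dataclasses import dataclass


@dataclass
class TextureSpec:
    subject: str            # what the texture depicts
    style: str              # e.g. "photorealistic", "hand-painted"
    palette: tuple = ()     # colour names to steer the palette
    detail: str = "high"    # free-form detail level
    tileable: bool = True   # ask for a seamless, repeatable pattern


def build_prompt(spec):
    """Turn a TextureSpec into one comma-separated text prompt."""
    parts = [f"{spec.subject} texture", f"{spec.style} style"]
    if spec.palette:
        parts.append("color palette: " + ", ".join(spec.palette))
    parts.append(f"{spec.detail} detail")
    if spec.tileable:
        parts.append("seamless tileable pattern")
    parts.append("flat even lighting, no shadows")
    return ", ".join(parts)


# Hypothetical request for a virtual try-on garment.
denim = TextureSpec("worn denim fabric", "photorealistic", ("indigo", "off-white"))
prompt = build_prompt(denim)
```

Keeping the description in one place makes it easy to vary a single parameter (say, the palette) while holding everything else fixed.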

Integrating Textures into AR Content and Optimizing Quality:

- After generating and post-processing textures (e.g., in Photoshop), ensure that they meet the requirements of engines like Babylon.js or three.js. Export them in suitable formats (e.g., WebP, PNG, or JPEG).

- When importing textures onto 3D models, use UV mapping in 3D editors like Blender to ensure accurate texture application on the model. Check material and shader settings so textures interact correctly with lighting and physical parameters in the AR scene.

- To optimize performance, use lower-resolution textures where possible, or apply compression that does not cause significant quality loss.
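To get a feel for why resolution matters so much, the following sketch estimates how much memory one texture occupies once it has been decoded. It assumes the image ends up as uncompressed 8-bit RGBA (4 bytes per pixel) with a full mipmap chain, which is typical for PNG, JPEG, and WebP sources. GPU-compressed formats behave differently and are not modelled here.

```python
def texture_bytes(width, height, bytes_per_pixel=4, mipmaps=True):
    """Approximate memory for one uncompressed texture, optionally with mipmaps."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, down to 1x1.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


def mib(n_bytes):
    """Convert bytes to mebibytes."""
    return n_bytes / (1024 * 1024)


for size in (4096, 2048, 1024, 512):
    print(f"{size}x{size}: {mib(texture_bytes(size, size)):.1f} MiB")
```

Halving each side of a texture cuts its memory footprint to roughly a quarter, so a 1024x1024 texture is often a sensible starting point on mobile browsers. File-format compression mainly reduces download size, since the decoded image still occupies the full amount in memory.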

Creating Textures that Interact Well with Lighting and Physics in AR:

- It is important to consider texture behavior under different lighting conditions. Use PBR (Physically Based Rendering) materials so they realistically react to light and shadows. This is especially important for clothing or masks, where textures need to look natural from various viewing angles.

- Work with normal and reflection maps to add volume and depth to the texture. These maps allow modeling light interaction with the surface, creating a more realistic effect, such as skin shine or fabric texture.

- Test textures directly in AR scenes using body and face tracking from the Geenee SDK to check how they adapt to movements and lighting in real time, making necessary adjustments.
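The way a normal map adds apparent depth can be shown in a few lines. Each pixel of a tangent-space normal map encodes a direction, and the renderer uses that direction instead of the flat surface normal when it computes lighting. The sketch below decodes a pixel and applies the simplest diffuse lighting model (Lambert). Real PBR shaders are considerably more involved, and the pixel values here are made up.

```python
import math


def decode_normal(r, g, b):
    """Convert an 8-bit normal-map pixel to a unit vector in tangent space."""
    x, y, z = (c / 255.0 * 2.0 - 1.0 for c in (r, g, b))
    length = math.sqrt(x * x + y * y + z * z) or 1.0  # guard against a zero vector
    return x / length, y / length, z / length


def lambert(normal, light_dir):
    """Diffuse intensity: cosine of the angle between normal and light, floored at 0."""
    lx, ly, lz = light_dir
    l_len = math.sqrt(lx * lx + ly * ly + lz * lz)
    dot = (normal[0] * lx + normal[1] * ly + normal[2] * lz) / l_len
    return max(0.0, dot)


light = (0.0, 0.0, 1.0)                # light shining straight at the surface
flat = decode_normal(128, 128, 255)    # the typical "no bump" blue
tilted = decode_normal(200, 128, 200)  # a texel leaning to one side
flat_brightness = lambert(flat, light)
tilted_brightness = lambert(tilted, light)
```

The flat texel receives nearly full light, while the tilted one is noticeably darker. Variations like this across a surface are what make fabric weave or skin pores read as three-dimensional without adding geometry.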

These tips will help create high-quality textures and integrate them into AR content, ensuring realistic interaction and maximum performance on user devices.

Future Perspectives

Improvement of Algorithms and Emergence of New Technologies:

- In the future, significant improvements in image generation algorithms are expected, making textures even more realistic and adaptive. The development of neural networks like diffusion models and GANs will lead to increased accuracy and generation speed, allowing for the creation of textures with minimal artifacts and maximum detail.

- New platforms and tools integrating image generation directly into AR engines like Babylon.js or three.js will simplify texture creation and application. For instance, future tools might generate textures in real-time based on environment analysis and tracking.

New Ways to Use AR and Image Generation:

- The synergy between AR and image generation will open up opportunities for creating dynamic content that adapts to real-world conditions. Examples include textures that change depending on lighting or time of day, and masks that automatically adjust to face shape and expression using real-time tracking data.

- Virtual try-on and customization in AR will reach a new level: users will be able to generate their own clothing or accessory designs on the fly using generative algorithms and see them integrated into the AR scene with high realism and interactivity.

Conclusion

Image generation technologies have become an essential element in creating quality AR content, providing the realism and interactivity needed for successful projects. Developing and integrating generated textures requires skills and knowledge, but this process will become easier in the future as algorithms improve and new platforms emerge.

We suggest that developers and designers explore and implement these technologies in their projects to create innovative AR content and utilize all the possibilities of image generation to enhance the quality and variety of virtual objects and effects.

Are you an engineer who wants to learn more about AR & AI? Schedule a tech 1:1 to learn more about our SDK.